a place becomes an escape from time

for Aloïse Gallery, Basel

A place becomes an escape from time (working title) combines Artificial Intelligence and sound sculpture. An AI is trained on the dream journals I have kept over the past few years (first during confinement, then during pregnancy) and generates its own dreams, which it speaks aloud through speakers embedded in an abstract, membranous, corporeal sculpture. The piece considers which forms of consciousness are capable of creating art.

More specifically, I work with a Generative Adversarial Network (GAN) and a small language model to generate texts, and with “Sensitive Artificial Listeners” (emotive speech bots) to speak them. Neural networks work by noticing patterns in written text (or pixels, sound files, etc.) and then repeating those patterns when generating new content. Models are “trained” on already existing data and attempt to mimic it as accurately as possible without understanding its meaning. This leads to results such as the infamous “deep dreaming” images: warped, digitally rendered pictures. In this project, a similar algorithm will be fed the texts of my dreams in order to create its own dream narratives. The artist’s intervention lies in tweaking the algorithms and in producing the training data.
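To make the idea of “noticing patterns and repeating them” concrete, here is a minimal sketch that uses a much simpler stand-in than the GAN and language model described above: a word-level bigram (Markov chain) model. It records which words follow which in a text, then writes new text by repeatedly choosing a next word in proportion to how often it followed the current one. The journal excerpt and the function names `build_bigram_model` and `generate` are hypothetical, invented for illustration; this is not the project’s actual pipeline.

```python
import random
from collections import defaultdict


def build_bigram_model(text):
    """Map each word to the list of words observed directly after it."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, length, seed=None):
    """Produce up to `length` words by walking the learned word-to-word patterns."""
    rng = random.Random(seed)
    word = start
    output = [word]
    for _ in range(length - 1):
        options = followers.get(word)
        if not options:
            break  # dead end: this word never had a successor in the training text
        # Duplicates in `options` mean frequent successors are chosen more often,
        # i.e. the next word is sampled by its observed probability.
        word = rng.choice(options)
        output.append(word)
    return " ".join(output)


# Hypothetical "dream journal" excerpt used only as toy training data.
journal = (
    "i was in a house by the sea and the house was full of water "
    "i was swimming in the house and the sea was in my room"
)
model = build_bigram_model(journal)
print(generate(model, "i", 12, seed=1))
```

Every pair of adjacent words in the output already occurs somewhere in the journal; the model can recombine what it has seen, but it cannot produce a word transition it was never shown.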

The next phase of the project will use “Sensitive Artificial Listeners” (SALs). SALs, a type of AI that has been the subject of some of my scholarly research, attempt to code vocal emotion based on tiny inflections in the voice, and then respond to these emotions with artificial voices designed to make the listener feel calmed, energized, empowered, etc. I will use open-source code for these Sensitive Artificial Listeners to generate the audio of the texts.

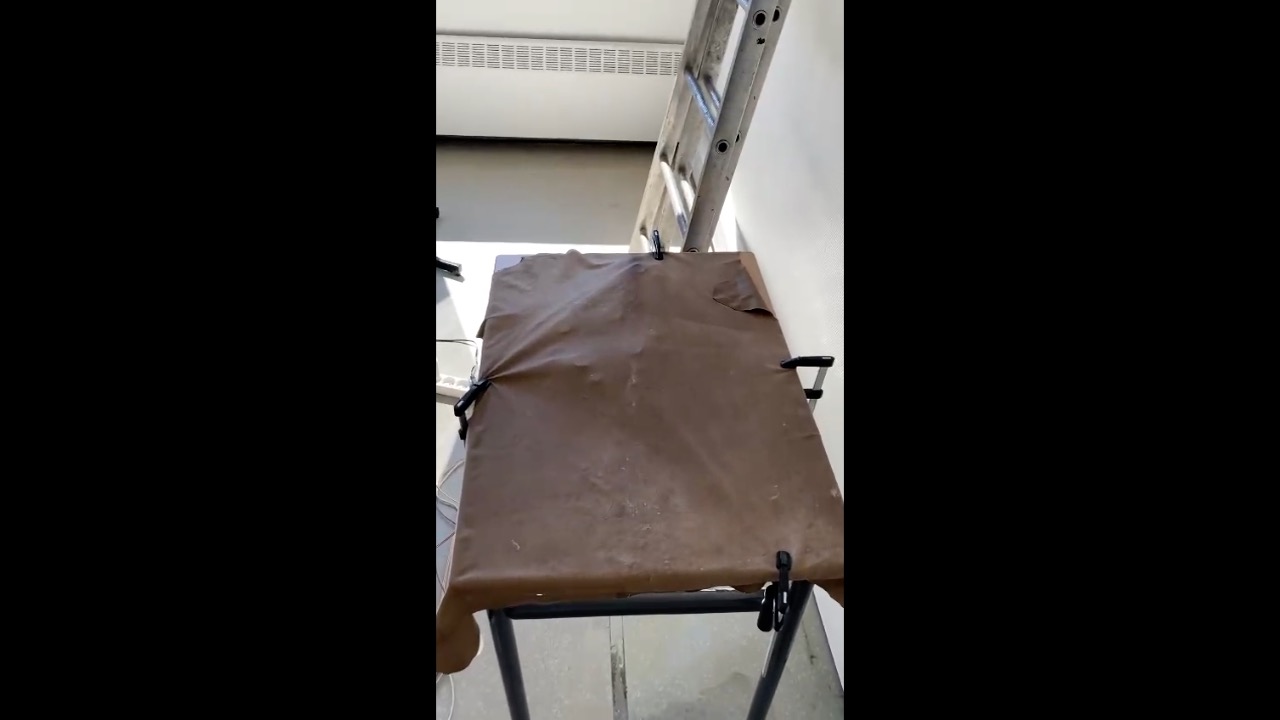

While the piece can also exist simply as audio files, I am developing a sculptural form to “embody” it, embedding speakers in handmade objects made of corporeal materials (leather, suede, faux leather, and silicone) whose forms abstractly reference bodies. The speakers within these membranous objects will cause the “skin” of the sculptural creature to move slightly as it transmits sound. These objects will speak the dreams.

The project is a true research-creation project in that it is experimental: I do not know what it will discover. It tests the powers and boundaries of these emerging tools in order to understand them more deeply through the creative process, and to share that experimentation and inquiry with a wider public. While most Artificial Intelligence is evaluated on how well it mimics patterns in human data, this project interrogates other values. Natural Language Processing algorithms select letters and words based on the statistical probability that one will follow another. Much of the critique of “algorithmic bias” concerns the fact that Machine Learning can only reveal and reiterate patterns already present in its training data. This leads to cases such as racist recidivism predictions, which claim that people living in lower-income zip codes are more likely to reoffend. Such forms of “intelligence” may be incapable of imagining outcomes other than those that have previously occurred.

Dreams, by contrast, are unpredictable and do not correspond to linear narratives (although dream journals do, in some respects). Furthermore, contemporary aesthetic values have less to do with fidelity or realism; they find interest in a creative consciousness for other reasons. This allows us, as humans, to articulate more clearly the relationship between, or delineation of, technique and creativity. While an AI might be valued for its capacity to mimic reality, we look to human creativity for its capacity to deviate from what has come before.

The piece is informed by my scholarly research, which examines the use of AI for “care work” (such as psychotherapy) and the attempt to “code” human affect, especially vocal affect. The proposed piece is another way of investigating whether machines could ever understand human emotion, or have an unconscious. I am interested in how they fail, as their failures show us what is un-code-able about the human. In the creation of these artefacts, something uncanny and also new can occur.